'They're coming for our jobs!'

Just for fun, I take a look at what progress in Artificial Intelligence software and Robotics could mean for the distant, and not-so-distant, future of Parkour & Freerunning.

Computers have been better than humans at all sorts of narrow tasks, such as calculation and product assembly, for decades now. More recently, with the rise of a particular type of software design called ‘neural networks’, computers have started to venture into more complex tasks. We’ve gone from beating the best humans at board games like Chess and Go, right up to driving cars (with some companies claiming lower crash rates than human drivers), and beyond into the realm of real-time language translation. But what does any of this have to do with Parkour? It may change the way the sport looks entirely. Allow me to explain!

But to explain, I’ll have to go back to Chess and Go for a moment. Go is an ancient Chinese board game with so many possible move combinations that it would be impossible for a computer to find the best move by ‘brute force’, that is, by testing every possibility individually and seeing which one is best.
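To get a feel for why brute force is hopeless, here’s a back-of-the-envelope sketch in Python. The number of possible games is roughly b^d, where b is the number of legal moves per turn and d is the length of a game. The figures below are rough, commonly quoted estimates (about 35 moves per turn over about 80 moves for Chess, about 250 over about 150 for Go), not exact values. Go comes out more than 200 orders of magnitude bigger than Chess, and both dwarf the roughly 10^80 atoms thought to be in the observable universe.

```python
import math

# Rough, commonly quoted estimates (assumptions, not exact figures):
# "branching" = typical number of legal moves per turn,
# "depth"     = typical length of a game in moves.
GAMES = {
    "Chess": {"branching": 35, "depth": 80},
    "Go": {"branching": 250, "depth": 150},
}

def log10_game_tree_size(branching: int, depth: int) -> float:
    """Approximate log10 of branching ** depth, the number of move sequences
    a brute-force search would need to check to see every game to the end."""
    return depth * math.log10(branching)

for name, g in GAMES.items():
    exponent = log10_game_tree_size(g["branching"], g["depth"])
    print(f"{name}: roughly 10^{exponent:.0f} possible games")
```

Working in log10 keeps the numbers printable; computing 250 ** 150 directly works in Python too, but produces a 360-digit integer.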

For this reason, beating the best humans at Go was long considered by many computer scientists to be the holy grail of 'narrow' AI, and some thought it would take decades more to achieve. (Narrow AI is just AI designed with one task in mind.)

That was until 2016, when a team at Google’s DeepMind did just that with their program ‘AlphaGo’. They used a ‘neural network’ architecture (in the simplest terms possible, software loosely inspired by how the human brain works) so the program could improve itself by learning from its mistakes.

They showed it lots of examples of strong human players’ Go games, so that it had some model of the game to work from, then had it play against itself 30 million times on Google’s servers. The end result had learned enough about the game, just from incrementally improving on its mistakes, to beat Lee Sedol, one of the best Go players in the world, 4-1 in a five-game match.

What was so spectacular about this was that AlphaGo had somehow developed a correct ‘intuition’ for which moves were worth exploring, since it had no way of calculating all the possibilities. In the process it had to innovate: it didn’t just play better than humans in some narrow way, it literally strategised in ways humans hadn’t thought of before (its famous ‘move 37’ in game two against Lee Sedol is the classic example). Keep this in mind, I’ll get back to it later!
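AlphaGo’s ‘intuition’ came from a neural network that rates how promising each move looks, which then steers a tree search. Here’s a minimal Python sketch of a single step of that steering, loosely modelled on the PUCT rule DeepMind described for their later AlphaGo Zero. The class and function names, the constant `c_puct = 1.5`, the `+ 1` inside the square root and the example priors are all illustrative assumptions, not DeepMind’s code.

```python
import math
from dataclasses import dataclass
from typing import Dict

@dataclass
class MoveStats:
    prior: float            # P: how promising the network's "intuition" says this move is
    visits: int = 0         # N: how many times the search has explored it so far
    value_sum: float = 0.0  # running total of how good those explorations turned out

    @property
    def mean_value(self) -> float:
        # Q: average result of exploring this move (0 if never explored)
        return self.value_sum / self.visits if self.visits else 0.0

def select_move(moves: Dict[str, MoveStats], c_puct: float = 1.5) -> str:
    """Pick the next move to explore using a PUCT-style score, Q + U.

    U is big for moves the network likes (high prior) that haven't been
    explored much yet, so the search spends its limited time on moves that
    "feel" right instead of trying everything."""
    total_visits = sum(m.visits for m in moves.values())

    def puct_score(m: MoveStats) -> float:
        # +1 inside the sqrt so priors still matter before any visits (an assumption)
        exploration = c_puct * m.prior * math.sqrt(total_visits + 1) / (1 + m.visits)
        return m.mean_value + exploration

    return max(moves, key=lambda name: puct_score(moves[name]))

# Hypothetical example: three candidate moves with made-up priors.
moves = {"a": MoveStats(prior=0.6), "b": MoveStats(prior=0.3), "c": MoveStats(prior=0.1)}
print(select_move(moves))  # prints: a  (the network's favourite gets looked at first)
moves["a"].visits, moves["a"].value_sum = 10, -5.0  # ...but exploring it went badly
print(select_move(moves))  # prints: b  (attention shifts to the next most promising move)
```

The design point is the balance: a move the network rates highly gets explored early, but if exploring it keeps turning out badly, its average value drags it down and the search moves on.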

Cool, that’s all pretty clever. So… Parkour? Yup, we just need a couple more ingredients.

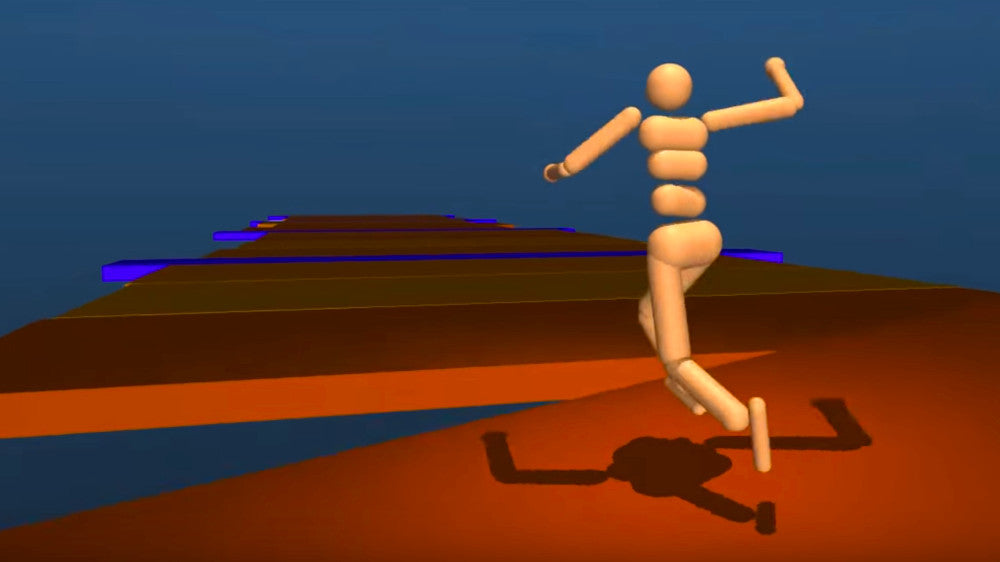

One of the incredible things about neural networks and deep learning is that they can be applied to so many different things. All they need is an input and some kind of ‘goal’, and they can get to work improving themselves with respect to that. In the video above, the coders made a simple physics engine, created a rudimentary stick-figure dude with some basic rules about how its body can move, and then told it to go as far forward as it can.

Obviously the results look pretty comical! But you’ve gotta remember that you’re looking at something that wasn’t ‘programmed’ to run in the traditional sense. The program figured out how to move by itself, by trying different 'muscle' movements until it found a sequence that moved the character forward. What you’re looking at is many hours of progress, starting from randomly falling over immediately, up to actually being able to jump some gaps!

That’s actually an important point. Again in layman's terms, part of the way this all works is that the program tries a small ‘random’ change to what it does, and if the outcome is worse after the change, it abandons that change and goes back to the previous version. If the random change improves the score slightly, it adopts that as the new version of the program. A bit like how natural selection works.

I feel like the arm movements look so bizarre because, out of all the random changes it tried, changes to the arm movements had much less impact on the outcome than changes to the leg movements. So you’re left with something close to the random arm movements of the first iteration of the program.
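The keep-it-only-if-it-helps loop from the last two paragraphs can be sketched in a few lines of Python. This is random-mutation hill climbing, the simplest version of the idea, and not necessarily what the teams in the referenced videos used (they likely used more sophisticated reinforcement learning). The toy walker, its eight settings and `toy_distance` are hypothetical stand-ins for a real physics simulation. Because the made-up score ignores the arm settings, the sketch also reproduces the arm effect guessed at above.

```python
import random
from typing import Callable, List

def hill_climb(score: Callable[[List[float]], float],
               params: List[float],
               steps: int = 5000,
               step_size: float = 0.1,
               seed: int = 0) -> List[float]:
    """Random-mutation hill climbing: make one small random change at a time
    and keep it only if it strictly improves the score."""
    rng = random.Random(seed)
    best = list(params)
    best_score = score(best)
    for _ in range(steps):
        candidate = list(best)
        i = rng.randrange(len(candidate))          # pick one "muscle" setting
        candidate[i] += rng.gauss(0.0, step_size)  # nudge it randomly
        candidate_score = score(candidate)
        if candidate_score > best_score:           # better: adopt as the new version
            best, best_score = candidate, candidate_score
        # worse or no different: throw the change away and keep the old version
    return best

# Hypothetical toy walker: 4 "leg" settings followed by 4 "arm" settings.
N_LEGS, N_ARMS = 4, 4
IDEAL_LEGS = [0.5, -1.2, 2.0, 0.3]  # made-up best gait, unknown to the optimiser

def toy_distance(params: List[float]) -> float:
    """Stand-in for a physics simulation: distance depends only on the legs."""
    legs = params[:N_LEGS]
    return 10.0 - sum((p - t) ** 2 for p, t in zip(legs, IDEAL_LEGS))

init_rng = random.Random(42)
start = [init_rng.uniform(-1.0, 1.0) for _ in range(N_LEGS + N_ARMS)]  # random first version
tuned = hill_climb(toy_distance, start)

print(f"distance: {toy_distance(start):.2f} -> {toy_distance(tuned):.2f}")
print("arm settings untouched:", tuned[N_LEGS:] == start[N_LEGS:])
```

Because this sketch changes one setting at a time, arm tweaks never improve the score, so they are simply never kept. A version that changed several settings at once would let arm tweaks ‘hitchhike’ on useful leg tweaks, so the arms would drift randomly instead; either way, nothing ever pushes them toward a sensible arm swing.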

That’s all well and good for stick figures, but what about more realistic-looking movement?

This team of guys used neural networks to try to build stable internal ‘muscular systems’ for a range of differently sized block characters. The results are seriously impressive, and they’re a couple of years old now, so who knows how much better this kind of thing has gotten since. Again, the program is essentially redesigning how the blocks work in relation to one another in order to produce stable, realistic-looking movement.

So what if you combined these two principles? What if you tried to design a highly accurate model of a human body, down to the skeleton and muscular structure, and then used that model to run another program with a goal like ‘Go from A to B as quickly as possible’?
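For a learning program like the stick-figure runner described earlier, a goal like ‘Go from A to B as quickly as possible’ has to be boiled down to a single score. Here’s one hypothetical way to do that in Python. The `Frame` record, the 1000-point fall penalty and the partial-credit rule are made-up design choices for illustration, not taken from any real project.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Frame:
    time_s: float      # seconds since the run started
    distance_m: float  # progress along the course from A towards B
    fallen: bool       # has the simulated body crashed?

FALL_PENALTY = 1000.0  # made-up; assumes any real run takes far less than 1000 s

def a_to_b_score(frames: List[Frame], course_length_m: float) -> float:
    """Boil 'go from A to B as quickly as possible' down to one number (higher is better)."""
    for f in frames:
        if f.fallen:
            # Failed run: big penalty, softened by how far it got first.
            return -FALL_PENALTY + f.distance_m
        if f.distance_m >= course_length_m:
            # Reached B: faster finish = higher (less negative) score.
            return -f.time_s
    # Never fell but never reached B either (e.g. the simulation timed out).
    furthest = max((f.distance_m for f in frames), default=0.0)
    return -FALL_PENALTY + furthest

fast = [Frame(0.0, 0.0, False), Frame(4.0, 30.0, False)]
slow = [Frame(0.0, 0.0, False), Frame(6.0, 30.0, False)]
fell = [Frame(0.0, 0.0, False), Frame(2.0, 12.0, True)]
print(a_to_b_score(fast, 30.0), a_to_b_score(slow, 30.0), a_to_b_score(fell, 30.0))
# prints: -4.0 -6.0 -988.0
```

The partial credit for distance matters early on: when every early attempt falls over, a score that only rewarded finishing would rate them all the same and give the optimiser nothing to climb.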

Of course, at the moment any attempt at that would look similarly comical and ridiculous, and probably wouldn’t yield anything useful at all. But think of this technology in 5, 10 or 15 years’ time. If it could be achieved, I think analysing human movement in this kind of AI simulation could change parkour massively. Here’s why I think that...

When AlphaGo beat Lee Sedol at Go, that was just the beginning. Later iterations of the program, like AlphaZero, have since improved on that performance using a fraction of the computation time, and without being shown any human games at all! This rapid progress led the game’s top human players to look into what these programs were doing so right.

They started to study the techniques AlphaGo and AlphaZero used to win, and learned to use some of them themselves. It’s apparently got to the point where, unless you’ve studied these computer-vs-computer games, you don’t really stand a chance in top human tournaments, because so many players have learned from the computers’ innovations and built them into their own game. The same thing happened in Chess too.

I think the same could happen with parkour and maybe even freerunning. With an accurate human model and lots of computing time, who knows what crazy innovations and possibilities in movement could be discovered?

That said, I think it’s fair to say the change wouldn’t be as dramatic as it was for Go and Chess, because so much of parkour is mental and about overcoming fear. Computer models of simulated characters doing ‘realistic’ parkour would show us what’s physically possible for the body, but nothing of people’s willingness to actually go for it! (Short of computationally modeling an entire human psyche…?)

Nonetheless, there could be some seriously surprising innovations. Once upon a time no one even really did strides, and if a movement technique as fundamental as that could have lain dormant for so long, maybe some other staple of every traceur’s toolkit is also waiting to be discovered? And that’s before we even get to all the never-before-done flips such a program could come up with.

Maybe that’s all fun and hypothetical. But then you look at what Boston Dynamics are doing with their robotics hardware. They’ve come a long way in a relatively short amount of time: check out this video where they get their human-sized Atlas robot to land a backflip. Again, imagine this plus 10 years or so. It’s already scary right now if you ask me, but in 10 years I’m sure its ability to move around will be uncanny.

When these two hypothetical worlds collide, we could see very dexterous robots, programmed with the ability to do parkour maneuvers we couldn’t even envision today. It only gets more advanced the further you extrapolate from there, with robots potentially innovating in real time and coming up with novel solutions to movement problems in mid-flight.

My prediction: a purpose-built robot will beat any human in a speed competition by 2038, and win any points-based style comp by 2048!

- - - - -

Haha, I hope you enjoyed the article! Let me know what you want to see more of in the comments. Apologies to any computer scientists out there if I butchered any of the concepts!

References:

AlphaGo - https://en.wikipedia.org/wiki/AlphaGo

Deep Learning - https://www.youtube.com/watch?v=TnUYcTuZJpM

Learning Locomotion - https://www.youtube.com/watch?v=14zkfDTN_qo

Making Muscles - https://www.youtube.com/watch?v=pgaEE27nsQw